Stream chat to your frontend with SSE in ASP.NET Core (.NET 10)

Server-Sent Events (SSE) is my favorite way to deliver token-by-token chat output to the browser. It’s simple, HTTP-friendly, and supported natively in modern browsers via EventSource. In this post, I’ll show how my streaming endpoint works end-to-end with ASP.NET Core (.NET 10) and Semantic Kernel (SK), then how the frontend listens and renders tokens.

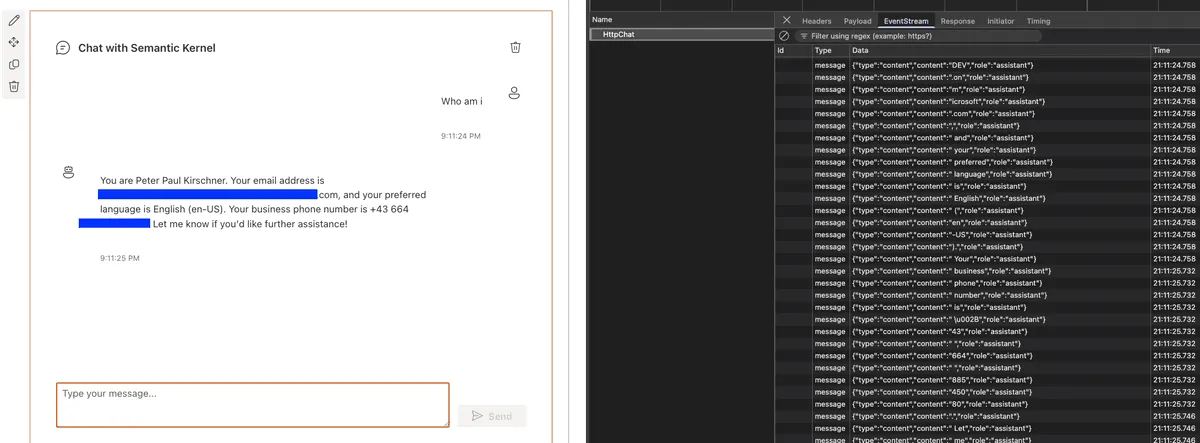

I’ll add a network tab screenshot here to show the text/event-stream response and individual event frames.

Why SSE for chat

- Low-latency: tokens appear as they’re generated; no need to wait for the full response.

- Simple transport: one long-lived HTTP response with content-type text/event-stream.

- Built-in reconnection: the browser’s EventSource automatically retries.

Architecture

Frontend (EventSource) → SSE endpoint (/api/chat/stream) → Semantic Kernel chat service (streaming) → writes data: <delta> frames → flush → browser appends to UI.

Backend: ASP.NET Core SSE endpoint

Below is a minimal, production-ready pattern using ASP.NET Core Minimal APIs. It uses Semantic Kernel under the hood to talk to your chosen model (Azure OpenAI, OpenAI, or local). The important parts are:

- Set Content-Type: text/event-stream and disable response buffering.

- Iterate the streaming chat and write data: ...\n\n frames.

- Flush after each chunk.

- Send a final event: done marker.

    using Microsoft.AspNetCore.Http.Features; // IHttpResponseBodyFeature
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.ChatCompletion;
    using Azure.Identity; // optional, if you use Managed Identity

    var builder = WebApplication.CreateBuilder(args);

    // 1) Register SK and your chat completion service of choice.
    //    Uncomment one registration below; with none enabled, resolving the service throws.
    builder.Services.AddSingleton<IChatCompletionService>(sp =>
    {
        var kb = Kernel.CreateBuilder();

        // Example: Azure OpenAI with Managed Identity (adjust to your environment)
        // kb.AddAzureOpenAIChatCompletion(
        //     deploymentName: Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT")!,
        //     endpoint: Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT")!,
        //     credentials: new DefaultAzureCredential());

        // Example: OpenAI API key
        // kb.AddOpenAIChatCompletion(
        //     modelId: "gpt-4o-mini",
        //     apiKey: Environment.GetEnvironmentVariable("OPENAI_API_KEY")!);

        var kernel = kb.Build();
        return kernel.GetRequiredService<IChatCompletionService>();
    });

    var app = builder.Build();

    // 2) SSE endpoint (GET). For POST bodies, prefer fetch streaming; EventSource only supports GET.
    app.MapGet("/api/chat/stream", async (HttpContext http, IChatCompletionService chat, string q) =>
    {
        http.Response.Headers.CacheControl = "no-cache";
        http.Response.Headers.Connection = "keep-alive";
        http.Response.Headers["Content-Type"] = "text/event-stream";

        // Ask the server not to buffer the response body
        http.Features.Get<IHttpResponseBodyFeature>()?.DisableBuffering();

        // Stop generating and writing if the browser disconnects
        var ct = http.RequestAborted;

        var history = new ChatHistory();
        history.AddSystemMessage("You are a concise, helpful assistant.");
        history.AddUserMessage(q);

        // Stream tokens from SK and forward as SSE frames
        await foreach (var chunk in chat.GetStreamingChatMessageContentsAsync(history, cancellationToken: ct))
        {
            if (!string.IsNullOrEmpty(chunk.Content))
            {
                // A chunk may contain newlines; emit one data: line per line so the frame stays valid
                var payload = chunk.Content.Replace("\r\n", "\n").Replace("\n", "\ndata: ");
                await http.Response.WriteAsync($"data: {payload}\n\n", ct);
                await http.Response.Body.FlushAsync(ct);
            }
        }

        // Send a final event so the client knows we’re done
        await http.Response.WriteAsync("event: done\n", ct);
        await http.Response.WriteAsync("data: end\n\n", ct);
        await http.Response.Body.FlushAsync(ct);
    });

    app.Run();

Notes:

- If you need richer framing, you can also emit id: and event: lines for each message.

- For long streams, send a periodic heartbeat comment (:\n\n) to keep intermediaries from closing idle connections.

- If you need POST (request body too large for a querystring), use fetch() streaming instead of EventSource (see below).
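The framing notes above can be made concrete with a small serializer. This is only a sketch, written in TypeScript rather than the C# of the endpoint so the wire format is easy to see; `SseFrame`, `formatSseFrame`, and `HEARTBEAT` are hypothetical names for illustration, not part of any library.

```typescript
// Hypothetical shape of one SSE message (illustrative, not a library type).
interface SseFrame {
  data: string;
  event?: string; // named event, e.g. "done"
  id?: string;    // sent back by the browser as Last-Event-ID on reconnect
}

// Serialize one frame into the text/event-stream wire format.
function formatSseFrame(frame: SseFrame): string {
  const lines: string[] = [];
  if (frame.event !== undefined) lines.push(`event: ${frame.event}`);
  if (frame.id !== undefined) lines.push(`id: ${frame.id}`);
  // A payload with embedded newlines needs one data: line per line;
  // a raw newline would otherwise cut the field (or the frame) short.
  for (const line of frame.data.split(/\r\n|\r|\n/)) {
    lines.push(`data: ${line}`);
  }
  // The blank line terminates the frame.
  return lines.join("\n") + "\n\n";
}

// A line starting with ":" is a comment; EventSource ignores it,
// which makes it usable as a keep-alive heartbeat.
const HEARTBEAT = ":\n\n";
```

For example, `formatSseFrame({ event: "done", data: "end" })` yields the same two lines the endpoint writes at the end of the stream.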

Frontend: EventSource listener (browser)

This is the simplest client: it connects and appends tokens as they arrive. Works in all modern browsers.

    <div id="out"></div>

    <script>
      const out = document.getElementById('out');
      const q = encodeURIComponent('Explain SSE in two bullets.');
      const es = new EventSource(`/api/chat/stream?q=${q}`);

      es.onmessage = (ev) => {
        out.textContent += ev.data;
      };

      es.addEventListener('done', () => {
        es.close();
      });

      es.onerror = (err) => {
        console.error('SSE error', err);
        es.close();
      };
    </script>

Alternative: fetch() streaming (POST support)

If you need to send a complex payload (files, RAG parameters, tools), use fetch with a POST endpoint that streams chunks in the response body.

    async function streamChat(body: unknown) {
      const res = await fetch('/api/chat/stream-post', {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify(body)
      });

      const reader = res.body!.getReader();
      const decoder = new TextDecoder();
      let text = '';

      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        text += decoder.decode(value, { stream: true });
        // Append to UI...
      }

      return text;
    }

On the server, implement a POST endpoint that writes plain text chunks and flushes. You can still use SK’s streaming enumerator; you just won’t frame it as SSE, which is fine for fetch.
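The `{ stream: true }` option in the read loop matters because network chunks can split a multi-byte UTF-8 character. The sketch below simulates that split by hand; `decodeChunks` and the sample bytes are assumptions for illustration, not part of the endpoint.

```typescript
// Simulate "é" (bytes 0xC3 0xA9 in UTF-8) being split across two network chunks.
const encoded = new TextEncoder().encode("café"); // 5 bytes: c, a, f, 0xC3, 0xA9
const chunkA = encoded.slice(0, 4); // "caf" plus the first byte of "é"
const chunkB = encoded.slice(4);    // the second byte of "é"

// Hypothetical helper: decode a list of chunks with or without streaming mode.
function decodeChunks(chunks: Uint8Array[], streaming: boolean): string {
  const decoder = new TextDecoder();
  let text = "";
  for (const chunk of chunks) {
    text += decoder.decode(chunk, { stream: streaming });
  }
  // Flush any bytes the streaming decoder is still holding back.
  if (streaming) text += decoder.decode();
  return text;
}

const streamed = decodeChunks([chunkA, chunkB], true); // partial character is buffered, then completed
const naive = decodeChunks([chunkA, chunkB], false);   // each half decodes to a U+FFFD replacement character
```

With streaming mode the decoder holds the incomplete byte until the next chunk arrives; without it, non-ASCII output can show up as replacement characters in the UI.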

How SSE works (quick refresher)

- Content-Type is text/event-stream. The server keeps the connection open.

- Each message is one or more data: lines followed by a blank line (\n\n).

- Optional fields: event: (named event), id: (for resume), retry: (reconnect delay).

- Browsers reconnect automatically. You can support resume by emitting id: and reading Last-Event-ID.
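To see these rules in action, here is a rough sketch of an incremental parser that turns decoded text chunks into events, similar in spirit to what EventSource does internally. It is a simplification: it assumes `\n` line endings only and ignores `retry:`. `SseEvent` and `createSseParser` are hypothetical names.

```typescript
interface SseEvent {
  event: string; // "message" unless the frame carried an event: field
  data: string;
  id?: string;   // last id: seen so far, if any
}

// Hypothetical incremental parser: feed it text chunks, get back completed events.
function createSseParser(): (chunk: string) => SseEvent[] {
  let buffer = "";
  let lastEventId: string | undefined;

  return function feed(chunk: string): SseEvent[] {
    buffer += chunk;
    const events: SseEvent[] = [];
    // A blank line ("\n\n") ends a frame; anything after the last one stays buffered.
    let boundary = buffer.indexOf("\n\n");
    while (boundary !== -1) {
      const frame = buffer.slice(0, boundary);
      buffer = buffer.slice(boundary + 2);

      let eventName = "message";
      const dataLines: string[] = [];
      for (const line of frame.split("\n")) {
        if (line === "" || line.startsWith(":")) continue; // comment or heartbeat
        const colon = line.indexOf(":");
        const field = colon === -1 ? line : line.slice(0, colon);
        let value = colon === -1 ? "" : line.slice(colon + 1);
        if (value.startsWith(" ")) value = value.slice(1); // strip one leading space only
        if (field === "data") dataLines.push(value);
        else if (field === "event") eventName = value;
        else if (field === "id") lastEventId = value;
      }
      // Frames without data (such as heartbeats) dispatch nothing.
      if (dataLines.length > 0) {
        events.push({ event: eventName, data: dataLines.join("\n"), id: lastEventId });
      }
      boundary = buffer.indexOf("\n\n");
    }
    return events;
  };
}
```

Because the parser buffers partial frames, a token split across two network chunks is still delivered as a single event once its terminating blank line arrives.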

Production tips

- CORS: enable only for your frontend origin. SSE uses GET; ensure credentials policy matches your needs.

- Reverse proxies: keep-alive and buffering settings matter. For Nginx/Apache/Azure Front Door, disable response buffering for text/event-stream.

- Heartbeats: write :\n\n every ~15–30s to keep load balancers from timing out.

- Error handling: consider emitting a final event: error frame with a sanitized message before closing.

- Telemetry: log start/stop and token counts at the server, not inside the tight write loop.

What I’ll add next

- A devtools Network screenshot showing the event stream frames.

- A small retry/backoff wrapper for EventSource.

- A POST streaming variant wired to a RAG pipeline.

References

- SSE spec: https://html.spec.whatwg.org/multipage/server-sent-events.html

- Semantic Kernel chat completion (C#): https://learn.microsoft.com/semantic-kernel/concepts/ai-services/chat-completion/

- ASP.NET Core response streaming docs: https://learn.microsoft.com/aspnet/core/fundamentals/middleware/request-response?view=aspnetcore-8.0#response-streaming